The Challenges of Ever-Advancing Tech

Modern electronics are nothing short of remarkable. We’ve gone from glass vacuum tubes the size of soda cans to transistors smaller than a virus particle. This progression has delivered supercomputers in our pockets and global connectivity on demand. It has also, somewhat paradoxically, made technology harder to understand at a fundamental level. A modern chip may have well over a billion transistors. No human manually routes those connections or reviews each gate. Design automation software does the heavy lifting, and we trust it because we must; the alternative would take centuries and several thousand engineers working in shifts. As technology advances, we still understand the big-picture architecture, but the fine details, such as individual routing paths and exact transistor-level behavior, fade into obscurity.

This is not a new trend. High-level programming languages such as Python and C made developers more productive but distanced them from machine code and assembly language. Hardware design has followed the same trajectory: we can describe a chip’s function but cannot necessarily explain every subtle electrical quirk. When something fails at the transistor level, or behaves unexpectedly under extreme electromagnetic or thermal conditions, solving it becomes less engineering and more detective work. Add quantum effects, signal integrity challenges, and ever-tighter design tolerances, and today’s electronics often resemble black boxes. They work astonishingly well until they don’t, and then even seasoned engineers may have no clear idea why.

Princeton Leads AI-Driven Wireless Chip Design Initiative

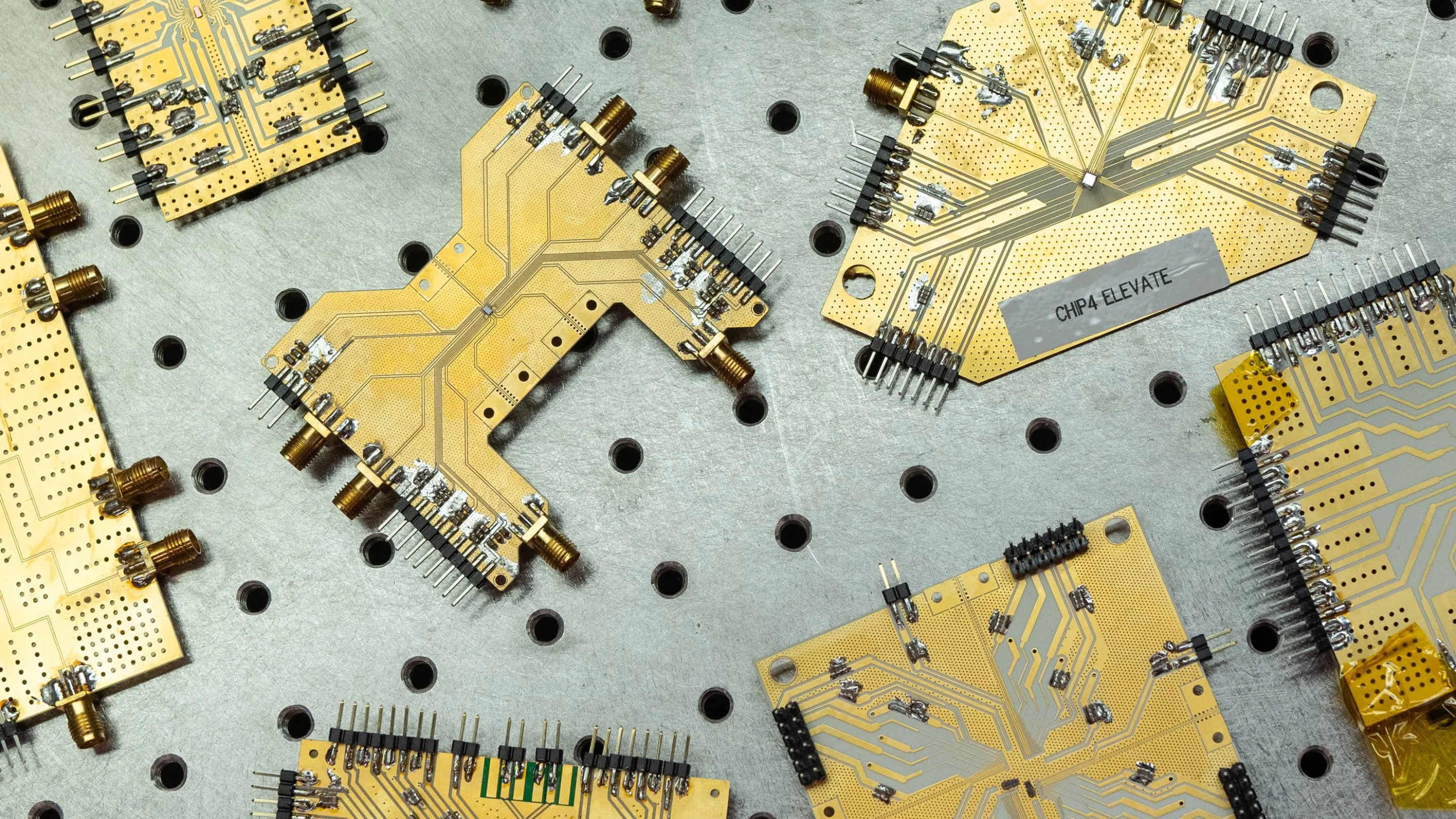

Princeton University will head a new $10 million program to develop advanced semiconductor designs for wireless communication and sensing. The project combines government funding with industry participation and focuses on automating the design of radio-frequency (RF) microchips, a task traditionally dependent on specialized engineering expertise. The effort will be directed by Kaushik Sengupta, professor of electrical and computer engineering at Princeton. His group aims to create AI-driven tools that can streamline and, in some cases, reinvent how wireless chips are designed. These chips form the backbone of modern communications infrastructure, from next-generation mobile networks and satellites to autonomous vehicles and connected medical devices.

Unlike digital processors, which already benefit from highly automated design flows, RF chips remain largely handcrafted. Their analog nature and sensitivity to environmental conditions require deep domain knowledge and time-consuming manual adjustments. According to Sengupta, this approach limits creativity and increases cost, making it harder to experiment with new architectures or unconventional circuit arrangements. The Princeton team has already demonstrated that AI-assisted design can outperform human-optimized layouts, presenting award-winning research at the 2022 IEEE International Microwave Symposium and receiving recognition from the IEEE Journal of Solid-State Circuits in 2023. Their approach flips the typical workflow, starting from system-level requirements and using AI to determine optimal circuit implementations, rather than tweaking existing manual designs.

Should We Rely on AI-Developed Hardware?

There is little doubt that AI will accelerate technological progress. Chips designed with machine learning could, in theory, deliver higher performance, lower power consumption, and greater overall efficiency than conventional designs. The question is not whether AI can design better chips (it already has) but whether we should depend on them. This is far more than a philosophical question. Reliability standards in critical industries such as automotive, medical, and defense are built on a deep understanding of how and why a device works. If an AI generates a circuit topology that even seasoned engineers struggle to explain, how can we qualify it as safe? For example, automotive integrated circuits require AEC-Q100 qualification, which demands rigorous validation of failure modes and predictable behavior. A design that “just works” but resists explanation presents an obvious compliance problem.

The risk escalates further in safety-critical systems. An unexplained optimization in a vehicle control module or a medical implant is not a clever quirk; it is a potential liability. If the internal workings are effectively a black box, debugging or mitigating failures becomes almost impossible. AI will undoubtedly reshape how chips are created, but unless its outputs can be understood and verified, we risk deploying technology we cannot fully control. That is not innovation; it is gambling.